
Power BI Enhancements You Need to Know – Part 1: The New Fabric Workspace


With the launch of Microsoft Fabric, the Power BI Workspace has transformed from a basic reporting repository to a unified data experience platform. If you haven't explored your workspace, you're in for a surprise!

Then vs. Now: Power BI Workspace Evolution

Before Microsoft Fabric, a Power BI workspace mainly held four kinds of items:

- Reports – Interactive visuals and insights

- Dashboards – Consolidated KPIs on a single canvas

- Datasets – Published data models

- Dataflows – ETL pipelines for reuse

Simple. Neat. But siloed.

Now with Fabric Integration – A Full-Fledged Data Hub

The Fabric-powered workspace now brings end-to-end data capabilities directly to Power BI users, enabling them to not only visualize but also ingest, store, transform, analyze, and automate – all in one place.

Here's what's new.

Microsoft Fabric – Key Capabilities Categorized

Microsoft Fabric offers a wide range of tools and capabilities to help you build end-to-end data solutions. These capabilities are categorized into the following major sections:

- Visualize data

- Get data

- Store data

- Prepare data

- Analyze and train data

- Track data

- Develop data

- Others

Visualize data

Present your data as rich visualizations and insights that can be shared with others.

- Dashboard: Build a single-page data story.

- Exploration (preview): Use lightweight tools to analyze your data and uncover trends.

- Paginated Report (preview): Display tabular data in a report that's easy to print and share.

- Real-Time Dashboard: Visualize key insights to share with your team.

- Report: Create an interactive presentation of your data.

- Scorecard: Define, track, and share key goals for your organization.

Get data

Ingest batch and real-time data into a single location within your Fabric workspace.

- Copy job: Makes it easy to copy data in Fabric. Includes full copy, incremental copy, and event-based copy modes.

- Data pipeline: Ingest data at scale and schedule data workflows.

- Dataflow Gen1 / Gen2: Prep, clean, and transform data.

- Eventstream: Capture, transform, and route real-time event streams to various destinations in desired formats with a no-code experience.

- Mirrored databases (Azure Cosmos DB, PostgreSQL, SQL Database, SQL Managed Instance, Snowflake, SQL Server): Easily replicate data from existing sources into an analytics-friendly format.

- Notebook: Explore, analyze, and visualize data and build ML models. Supports Apache Spark, Python, T-SQL, and more.

- Spark Job Definition: Define, schedule, and manage your Apache Spark jobs for big data processing.
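Incremental copy, one of the Copy job modes listed above, is commonly built on a watermark: remember the highest change value copied so far and, on the next run, copy only rows above it. The Python sketch below illustrates that general pattern. It is not the Copy job's actual implementation. It assumes each source row carries a hypothetical `modified_at` timestamp column, and it uses a plain list to stand in for a Lakehouse or Warehouse table.

```python
from datetime import datetime


def incremental_copy(source_rows, destination, watermark, column="modified_at"):
    """Append rows changed since `watermark` to `destination`; return the new watermark.

    `column` is a hypothetical change-tracking column (a timestamp or an
    ever-increasing ID). Rows at or below the stored watermark were already copied.
    """
    new_rows = [row for row in source_rows if row[column] > watermark]
    destination.extend(new_rows)
    # Persist the highest value seen so the next run starts where this one stopped.
    return max((row[column] for row in new_rows), default=watermark)


# Usage: the first run behaves like a full copy, later runs copy only the delta.
source = [
    {"id": 1, "modified_at": datetime(2025, 6, 1)},
    {"id": 2, "modified_at": datetime(2025, 6, 5)},
]
destination = []
watermark = incremental_copy(source, destination, datetime.min)  # copies ids 1 and 2

source.append({"id": 3, "modified_at": datetime(2025, 6, 9)})
watermark = incremental_copy(source, destination, watermark)  # copies only id 3

print([row["id"] for row in destination])  # [1, 2, 3]
print(watermark)  # 2025-06-09 00:00:00
```

This sketch only appends. If a source row is updated after it was copied, it is appended a second time. A real pipeline would merge (upsert) on a key instead.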

Store data

Organize, query, and store your ingested data in an easily retrievable format.

- Eventhouse: Rapidly load structured, unstructured, and streaming data for querying.

- Lakehouse: Store big data for cleaning, querying, reporting, and sharing.

- Sample warehouse: Start a new warehouse with sample data already loaded.

- Semantic model: Combine data sources in a semantic model to visualize or share.

- SQL database (preview): Build modern cloud apps that scale on an intelligent, fully managed database.

- Warehouse: Combine data from multiple sources to provide strategic insights across your entire business.

Prepare data

Clean, transform, extract, and load your data for analysis and modeling tasks.

- Apache Airflow job: Simplifies the creation and management of Apache Airflow environments for end-to-end data pipelines.

- Copy job: Includes full copy, incremental copy, and event-based copy modes.

- Data pipeline: Ingest data at scale and schedule data workflows.

- Dataflow Gen1 / Gen2: Prep, clean, and transform data.

- Eventstream: Capture, transform, and route real-time streams.

- Notebook: Explore, analyze, and build ML models.

- Spark Job Definition: Define and manage Spark jobs.

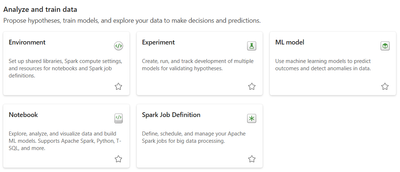

Analyze and train data

Propose hypotheses, train models, and explore your data to make decisions and predictions.

- Environment: Set up Spark compute settings and resources for notebooks.

- Experiment: Create, run, and track multiple models to validate hypotheses.

- ML model: Use machine learning to predict outcomes and detect anomalies.

- Notebook: Analyze data and build ML models.

- Spark Job Definition: Manage Spark jobs for big data processing.

Track data

Monitor your streaming or near real-time data and take action on insights.

- Activator: Monitor datasets, queries, and streams to trigger alerts.

- Copy job: Includes event-based monitoring capabilities.

- Eventhouse: Query streaming and structured data.

- Eventstream: Real-time data transformation and routing.

- KQL Queryset: Run queries to generate shareable insights.

- Scorecard: Define and track organizational goals.

Develop data

Create and build software, applications, and data solutions.

- API for GraphQL: Connect apps to Fabric data sources via GraphQL.

- Environment: Set up Spark resources for development.

- Notebook: Develop analytical and ML solutions using various languages.

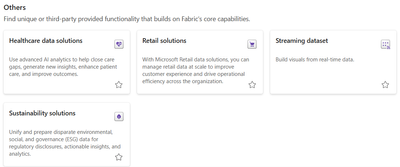

Others

Find unique or industry-specific functionality that extends Fabric’s capabilities.

- Healthcare data solutions: Leverage AI to improve healthcare insights and patient outcomes.

- Retail solutions: Scale and analyze retail data to enhance customer experiences.

- Streaming dataset: Build visuals directly from real-time data streams.

- Sustainability solutions: Unify ESG data for disclosures and analytics.
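Several items appear under more than one category. Notebook, Copy job, Eventstream, Environment, Eventhouse, and Scorecard all show up in multiple lists. The categories are therefore best read as overlapping views of the workspace rather than exclusive buckets. The Python sketch below makes this concrete by grouping workspace items by category through a hand-written, partial mapping. The item `type` strings and the `displayName`/`type` field names are assumptions modelled on the Fabric REST API's List Items response, so verify them against your own workspace before relying on them.

```python
from collections import defaultdict

# Assumed, partial mapping from Fabric item "type" values to this post's categories.
# The type strings are assumptions to verify against a real List Items response.
CATEGORIES_BY_TYPE = {
    "Report": ["Visualize data"],
    "Dashboard": ["Visualize data"],
    "PaginatedReport": ["Visualize data"],
    "KQLDashboard": ["Visualize data"],  # Real-Time Dashboard
    "CopyJob": ["Get data", "Prepare data", "Track data"],
    "DataPipeline": ["Get data", "Prepare data"],
    "Dataflow": ["Get data", "Prepare data"],
    "Eventstream": ["Get data", "Prepare data", "Track data"],
    "MirroredDatabase": ["Get data"],
    "Notebook": ["Get data", "Prepare data", "Analyze and train data", "Develop data"],
    "SparkJobDefinition": ["Get data", "Prepare data", "Analyze and train data"],
    "Eventhouse": ["Store data", "Track data"],
    "Lakehouse": ["Store data"],
    "SemanticModel": ["Store data"],
    "SQLDatabase": ["Store data"],
    "Warehouse": ["Store data"],
    "Environment": ["Analyze and train data", "Develop data"],
    "MLExperiment": ["Analyze and train data"],
    "MLModel": ["Analyze and train data"],
    "Reflex": ["Track data"],  # Activator items
    "KQLQueryset": ["Track data"],
    "GraphQLApi": ["Develop data"],
}


def group_by_category(items):
    """Group item display names by category; one item can fall into several."""
    groups = defaultdict(list)
    for item in items:
        # Types missing from the mapping are collected under "Unmapped".
        for category in CATEGORIES_BY_TYPE.get(item["type"], ["Unmapped"]):
            groups[category].append(item["displayName"])
    return dict(groups)


# Usage with a hand-written sample shaped like a List Items response ("value" array).
sample = {"value": [
    {"displayName": "Sales Report", "type": "Report"},
    {"displayName": "Bronze", "type": "Lakehouse"},
    {"displayName": "Ingest Orders", "type": "Notebook"},
]}
for category, names in group_by_category(sample["value"]).items():
    print(f"{category}: {names}")
```

A single notebook such as "Ingest Orders" is listed under four categories here, which mirrors how the same item type recurs across the lists above.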

Why It Matters

This unified approach breaks down silos. You no longer have to juggle different tools for data storage, preparation, modeling, and visualization. Instead, Power BI workspaces in Fabric act as your single pane of glass for the entire data lifecycle.

“From data ingestion to actionable dashboards – everything lives in one workspace.”

Proud to be a Microsoft Fabric community super user

